The gen_event behavior is typically presented in the context of the

most obvious tasks it can be applied to: logging and alarm

handling[^1]. This presentation undersells what an interesting

behavior it really is. For one thing, the runtime structure of a

gen_event event manager and its associated callback modules is

fundamentally different from every other OTP behavior. Multiple

callback modules are just a small part of what makes OTP event managers

interesting. The add_sup_handler/3 function enables a very different

kind of supervision than what is typical of processes implementing the

supervisor behavior. Before discussing this new (to me) perspective,

I’ll lay out the problem that led me to the realization.

Handling failures without killing everything

I ran up against an interesting problem that pushed me to learn

something new while working on an Erlang implementation of the Open

Charge Point Protocol (my “practice Erlang” side project). The

application includes a gen_statem process that models the state of

each charging station with respect to the protocol

(ocpp_station). This state machine is persistent, even when the

station is not connected to the management system. To handle requests

sent from the station to the management system a custom ocpp_handler

behavior is implemented by charging station management system

developers to provide callbacks that handle OCPP messages. Whenever

the state machine needs a decision from the “business logic” it sends

an asynchronous notification to the process running the ocpp_handler

code and waits for another event.

The interesting problem arises because this handler might crash and

must be restarted to handle more requests (that hopefully don’t cause

another failure). When it crashes, however, the ocpp_station state

machine should not be affected at all. In fact, the ocpp_station

module shouldn’t even need to know that the handler crashed. It should

simply receive an event instructing it to send an “internal error”

message to the charging station and transition to whatever its next

state is.

The obvious (to me at least) first approach is to start and monitor

the handler from the ocpp_station state machine. We can’t just rely on

the supervisor since the state machine must know the new Pid. Because

the state machine must know the Pid of the handler it must be

responsible for restarting it when it fails (rather than letting the

supervisor do it). This starts to look like a very ugly mixing of

concerns. To capture the complexity of the Open Charge Point Protocol,

the state machine is already very complicated; adding the complexity

required for monitoring and restarting processes will make it

substantially harder to understand how it works.

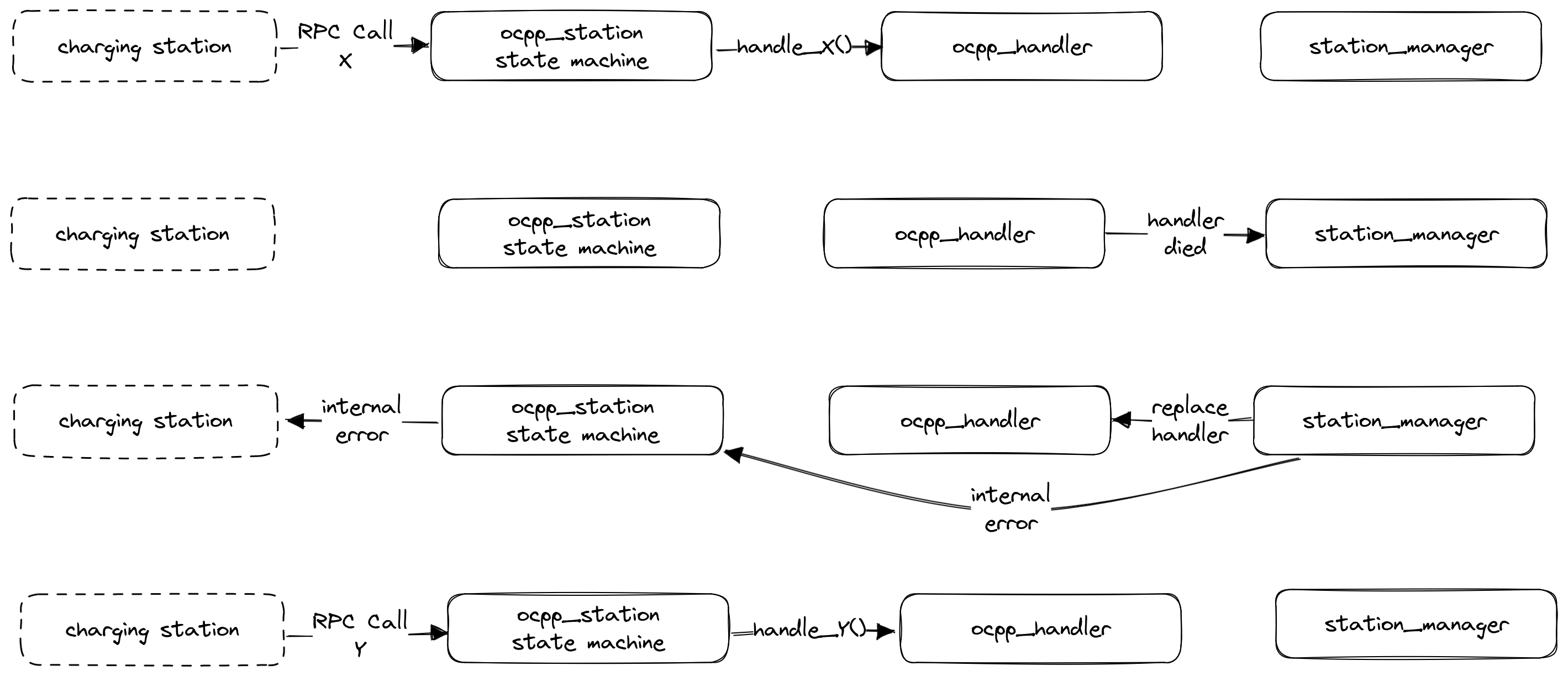

I could just move the monitoring and restarting function to another

process, but that only provides an illusion of decoupling. A station

manager process is responsible for starting the state machine and

brokering certain interactions. That process is an obvious candidate

for this functionality since it is in a good position to inform the

state machine when the handler crashes. When the manager receives a

signal that the handler has crashed it restarts the handler, instructs

the state machine to send an “internal error” message to the charging

station, then sends the Pid of the new handler to the state

machine. This is slightly better because the monitoring and restarting

logic are not part of ocpp_station; however, it still requires extra

logic in the state machine that is unrelated to the protocol. The best

solution is one that is completely transparent to the state machine.

Why does this seem harder than it should be?

TL;DR: a digression about how applications for different protocols might handle the same problem.

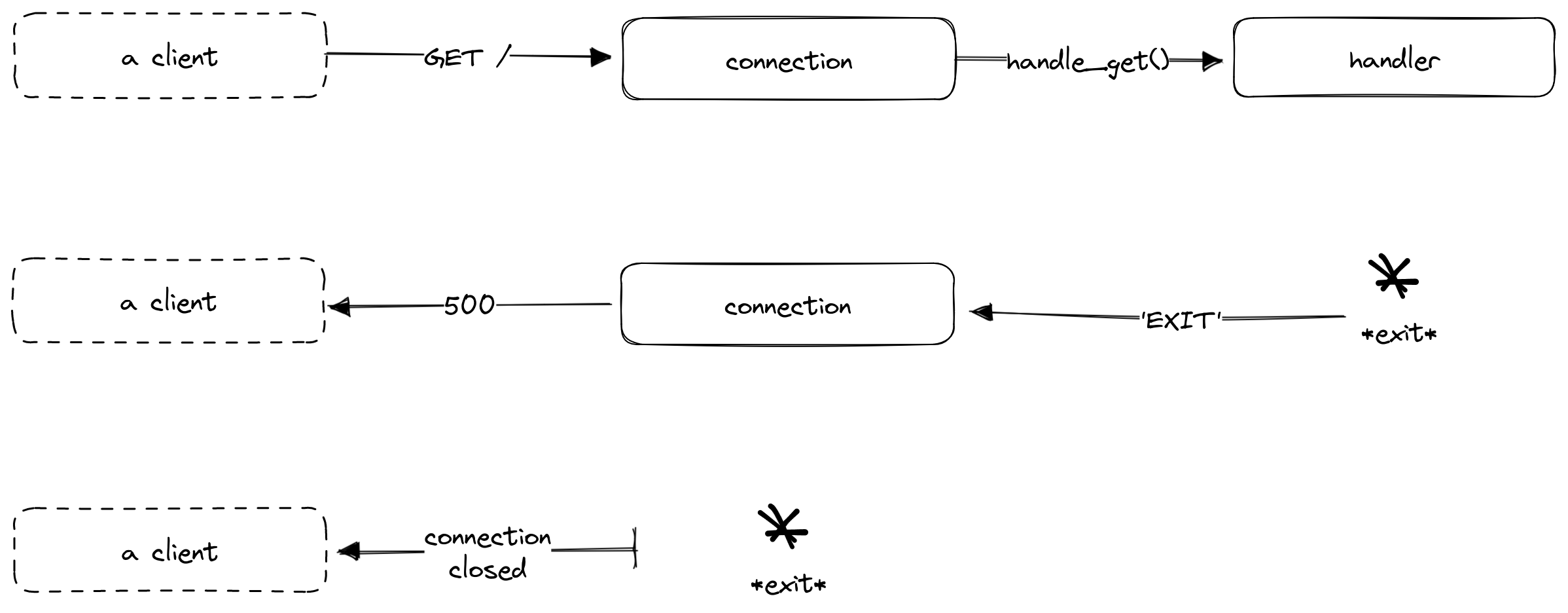

This problem doesn’t seem unique. For example, a very similar problem comes up in a simple HTTP server application. Suppose the application lets you implement your own handler module to process requests. Whenever a new connection is established, the application starts a connection manager process and a handler process. The connection manager transforms requests into Erlang terms and sends them to the handler. The handler processes the request and sends a reply to the manager, which transforms it into a properly structured HTTP response and sends it to the client. When the handler crashes, an exit signal is sent to the connection manager, which can inform the client of the error before closing the connection and exiting itself. This is illustrated below.

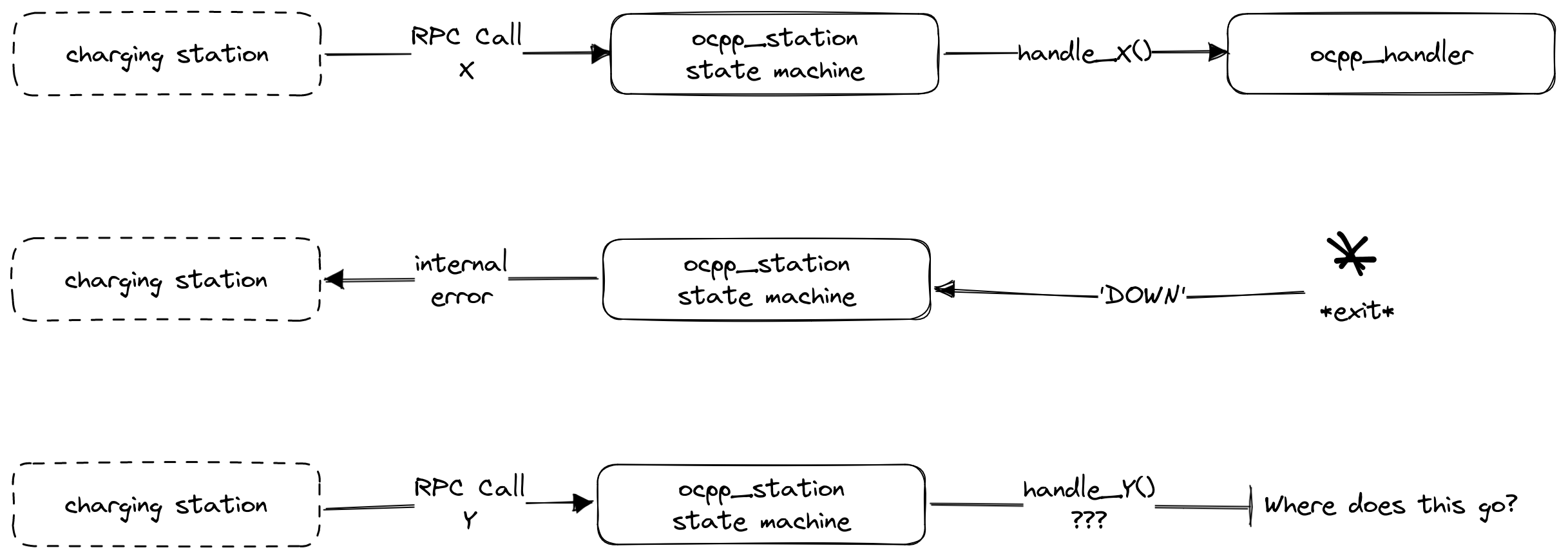

When there is an internal error in an HTTP server the connection can be closed and all the processes involved in handling requests for that connection can exit. Compare this to OCPP and it is clear why dealing with handler failures is more challenging. OCPP requires the connection to stay open and the management system to maintain its knowledge of the state of the charger. This is illustrated in the figure below.

Event Handlers & Transparent Supervision

To keep the state machine process in blissful ignorance of handler

failures it must be able to send requests that need to be handled to

the same process, even after the handler has crashed and been

restarted. The best way to make this happen is by introducing

indirection. Instead of a process that directly runs the handler we

need to use a process that manages the handler state and only runs the

handler within a try ... catch expression. When it catches an

error or an exit it can send the “internal error” message to the

state machine and then reinitialize the handler to get ready for the

next request.
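
The indirection described above can be sketched in a few lines of Erlang. This is only an illustration under stated assumptions: the module name `safe_handler_sketch`, the `handle_request/2` callback, and its `{reply, Reply, NewState}` return shape are hypothetical and are not part of the real ocpp_handler behavior. The module doubles as its own stand-in callback module so that it can be compiled and tried on its own.

```erlang
-module(safe_handler_sketch).
-export([run/3]).
%% Hypothetical callbacks, exported so this module can act as its own handler.
-export([init/1, handle_request/2]).

%% Stand-in handler: the state is a count of successfully handled requests.
init([]) ->
    {ok, 0}.

%% The atom 'boom' simulates a bug in the business logic.
handle_request(boom, _Count) ->
    error(deliberate_crash);
handle_request(_Request, Count) ->
    {reply, accepted, Count + 1}.

%% Run the handler callback inside try ... catch. On success, return the
%% reply and the updated handler state. On an error, exit, or throw,
%% reinitialize the handler and report an internal error to the caller
%% instead of crashing it.
run(Mod, Request, State) ->
    try Mod:handle_request(Request, State) of
        {reply, Reply, NewState} ->
            {ok, Reply, NewState}
    catch
        Class:Reason ->
            {ok, FreshState} = Mod:init([]),
            {internal_error, {Class, Reason}, FreshState}
    end.
```

With this sketch, `safe_handler_sketch:run(safe_handler_sketch, heartbeat, 5)` returns `{ok, accepted, 6}`, while passing `boom` returns `{internal_error, {error, deliberate_crash}, 0}`: the caller survives and the handler state is back to its initial value.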

Implementing this structure with a gen_server would be a

straightforward task, but it is unnecessary. The gen_event behavior

already implements this for us! We use the station manager process to

install the handler and supervise it. When it fails the station

manager re-initializes and re-installs the handler before sending an

“internal error” message to the state machine. The gen_event

solution is illustrated below. Using gen_event also means we can

install additional handlers that we may want such as loggers and

alarms.
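
A minimal sketch of the supervised-handler arrangement follows. It relies on the documented `gen_event:add_sup_handler/3` behavior: when a supervised handler is removed, the event manager sends `{gen_event_EXIT, Handler, Reason}` to the process that installed it. Everything else is an assumption made for illustration: the module name, the `{request, Term}` event shape, the `{handler_reply, Term}` message, and the fact that a single process plays both the station manager and the state machine.

```erlang
-module(sup_handler_sketch).
-behaviour(gen_event).

-export([demo/0]).
-export([init/1, handle_event/2, handle_call/2]).

%% gen_event callbacks: a stand-in for the business logic.
%% The handler state is the pid that replies should be sent to.
init(ReplyTo) ->
    {ok, ReplyTo}.

%% The request 'crash_me' simulates a bug in the handler.
handle_event({request, crash_me}, _ReplyTo) ->
    error(handler_bug);
handle_event({request, Req}, ReplyTo) ->
    ReplyTo ! {handler_reply, Req},
    {ok, ReplyTo}.

handle_call(_Request, ReplyTo) ->
    {ok, ignored, ReplyTo}.

%% Station manager side. The calling process installs the handler with
%% add_sup_handler/3, so it is the one notified if the handler is removed.
demo() ->
    {ok, Mgr} = gen_event:start_link(),
    Self = self(),
    ok = gen_event:add_sup_handler(Mgr, ?MODULE, Self),
    gen_event:notify(Mgr, {request, crash_me}),
    First =
        receive
            {gen_event_EXIT, ?MODULE, Reason}
              when Reason =/= normal, Reason =/= shutdown ->
                %% Re-install the handler; a real manager would now tell
                %% the state machine to send an "internal error" message.
                ok = gen_event:add_sup_handler(Mgr, ?MODULE, Self),
                internal_error
        after 1000 ->
            timeout
        end,
    %% The same event manager pid keeps working after the crash.
    gen_event:notify(Mgr, {request, boot_notification}),
    Second =
        receive
            {handler_reply, Req} -> Req
        after 1000 ->
            timeout
        end,
    gen_event:stop(Mgr),
    {First, Second}.
```

Calling `sup_handler_sketch:demo()` should return `{internal_error, boot_notification}` (the event manager also logs an error report for the crashed handler). The point of the sketch is that the process sending events only ever needs the pid of the event manager, which does not change when the handler is replaced.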

One small problem

Unfortunately, this solution might have a small problem. There is a potential race condition caused by the asynchronous way the supervised handler is replaced after it fails. OCPP requires that a station does not send any messages while awaiting a reply for a previous message sent to the management system. However, a timeout allowing the station to retry its last message could coincide with the failure of the handler and lead to another message arriving at the state machine between when the handler crashed and when it is reinstalled. The sequence is shown below.

If the state machine is able to defer the new request from the station

until it receives a message from the handler or the manager then the

race condition is not a problem. However, if it is unable to defer the

message (e.g. because it isn’t in a state that indicates it is

awaiting an internal event) then the race is a problem. Even deferring

requests like this may be undesirable if it adds too much complexity

to the state machine. I’ll have to keep this in mind as I am

implementing this. If I don’t like the way it works I can build the

generic parts of the ocpp_handler behavior so that they reinitialize

the handler while blocking the event manager to guarantee that no events

can be processed while the handler is not installed and running.
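
Deferring a request maps naturally onto the `postpone` action of gen_statem. The sketch below is a toy state machine, not the real ocpp_station module: the state names, the `{station_request, Term}` and `{handler_result, Term}` events, and the log kept as state data are all invented for illustration.

```erlang
-module(postpone_sketch).
-behaviour(gen_statem).

-export([demo/0]).
-export([init/1, callback_mode/0, idle/3, awaiting_handler/3]).

callback_mode() ->
    state_functions.

%% The data is a log of what the state machine did, newest entry first.
init([]) ->
    {ok, idle, []}.

%% In idle, a station request is accepted and handed to the handler.
idle(cast, {station_request, Req}, Log) ->
    {next_state, awaiting_handler, [{accepted, Req} | Log]};
idle({call, From}, get_log, Log) ->
    {keep_state_and_data, [{reply, From, lists:reverse(Log)}]}.

%% While waiting for the handler (or for the manager's internal error),
%% anything else is postponed and retried after the next state change.
awaiting_handler(cast, {handler_result, Result}, Log) ->
    {next_state, idle, [{answered, Result} | Log]};
awaiting_handler(cast, {station_request, _Req}, _Log) ->
    {keep_state_and_data, [postpone]};
awaiting_handler({call, _From}, get_log, _Log) ->
    {keep_state_and_data, [postpone]}.

%% Replays the race: a retry arrives before the handler outcome is known.
demo() ->
    {ok, Fsm} = gen_statem:start_link(?MODULE, [], []),
    gen_statem:cast(Fsm, {station_request, r1}),
    gen_statem:cast(Fsm, {station_request, r1_retry}),
    gen_statem:cast(Fsm, {handler_result, internal_error}),
    gen_statem:cast(Fsm, {handler_result, ok}),
    Log = gen_statem:call(Fsm, get_log),
    gen_statem:stop(Fsm),
    Log.
```

In this toy version the retry is not lost: `postpone_sketch:demo()` should return `[{accepted,r1},{answered,internal_error},{accepted,r1_retry},{answered,ok}]`. Whether the real state machine can always be in a state where postponing is valid is exactly the open question raised above.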

Conclusion

The fact that handlers installed in an OTP event manager can crash and

be replaced without any processes that interact with that handler

knowing is very useful. Thinking about this as transparent

supervision, as opposed to “standard” supervision where the process is

completely replaced, highlights this behavior for me and reminds me to

look for other places I can replace monitoring and restarting logic

with a supervised gen_event handler.

[^1]: Learn You Some Erlang presents a different application for this very reason.